Rethinking generative AI from the ground up: Inside the TODO project with Alain Durmus

In recent years, generative AI has intrigued the world with its ability to produce strikingly realistic images, videos, and sounds. But behind the surface-level magic lies a complex architecture, one that, for all its success, often operates more like a black box than a transparent scientific system.

Alain Oliviero Durmus (École polytechnique – Institut Polytechnique de Paris), a Hi! PARIS chair in the 2025 cohort, believes it doesn’t have to be this way. His project, Toward Enhanced Generative Models (TODO), aims to bridge the gap between powerful generative tools and the mathematical theory needed to fully understand and improve them.

“We’re in a moment where generative models are everywhere, but we still don’t fully understand why they work,” says Durmus. “My goal is to change that.”

From noise to knowledge

At the core of Durmus’s research is a pair of increasingly prominent tools in AI: diffusion models and flow-based generative models. These systems take random noise and transform it, step by step, into complex data, be it an image, a molecule, or even a physical simulation. Their success has been undeniable, but their foundations remain shaky.
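To make the "noise to data" idea concrete, here is a minimal sketch of a diffusion sampler in one dimension. It is an illustration only, not code from the TODO project: the two-mode target, the Ornstein-Uhlenbeck noising process, the step count, and the function names `score` and `sample_reverse_diffusion` are all assumptions chosen so that the score is available in closed form. In a real model the score would be learned by a neural network.

```python
import numpy as np

# Hypothetical toy target: a balanced two-component Gaussian mixture in 1D.
MEANS = np.array([-2.0, 2.0])
WEIGHTS = np.array([0.5, 0.5])
SIGMA = 0.3  # standard deviation of each mixture component


def score(x, t):
    """Exact score d/dx log p_t(x) of the noised mixture at time t.

    Assumed forward (noising) process: dX = -X dt + sqrt(2) dW.
    It maps each component N(m, SIGMA^2) to
    N(m * exp(-t), SIGMA^2 * exp(-2t) + 1 - exp(-2t)).
    """
    decay = np.exp(-t)
    mu = MEANS * decay                              # component means at time t
    var = SIGMA**2 * decay**2 + 1.0 - decay**2      # shared component variance
    diff = x[:, None] - mu[None, :]                 # shape (n_samples, 2)
    log_w = np.log(WEIGHTS)[None, :] - 0.5 * diff**2 / var
    log_w -= log_w.max(axis=1, keepdims=True)       # stabilise the softmax
    resp = np.exp(log_w)
    resp /= resp.sum(axis=1, keepdims=True)         # posterior component weights
    return -(resp * diff).sum(axis=1) / var


def sample_reverse_diffusion(n_samples=5000, n_steps=500, horizon=5.0, seed=0):
    """Euler-Maruyama discretisation of the time-reversed noising process."""
    rng = np.random.default_rng(seed)
    dt = horizon / n_steps
    # Start from pure noise: N(0, 1) approximates the noised law at t = horizon.
    y = rng.standard_normal(n_samples)
    for k in range(n_steps):
        t = horizon - k * dt                        # remaining forward time
        drift = y + 2.0 * score(y, t)               # -f + g^2 * score, f = -x, g^2 = 2
        y = y + drift * dt + np.sqrt(2.0 * dt) * rng.standard_normal(n_samples)
    return y


if __name__ == "__main__":
    samples = sample_reverse_diffusion()
    print("fraction in right-hand mode:", np.mean(samples > 0))
    print("mean distance from origin:", np.mean(np.abs(samples)))
```

Under these assumptions the samples should split roughly evenly between the two modes. The step size and time horizon here are exactly the kind of hand-picked settings that the article describes as being chosen by trial and error.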

“Right now, most of the models are designed by trial and error,” he explains. “We tweak parameters, adjust noise schedules, and hope it works. But we don’t always know why it works, or when it might fail.”

That lack of theoretical clarity has real consequences: unstable training, unpredictable failures, and challenges in extending these models to new domains like biology, economics, or climate science. Durmus’s project aims to bring order to the chaos.

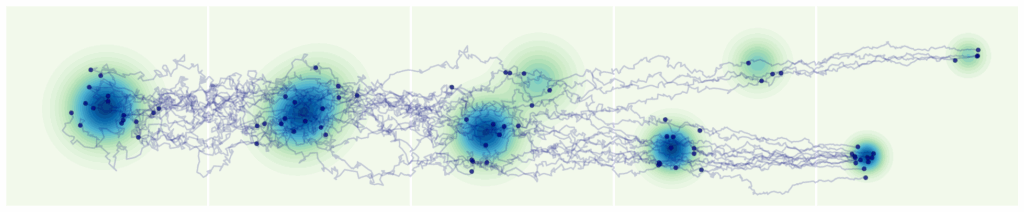

To help visualize the kind of structure these models can achieve, the following figure shows a diffusion-based sampler designed to capture complex, multi-modal distributions.

Figure: Learned reference-based diffusion sampler capturing multi-modal distributions.

Credit: Noble, M., Grenioux, L., Gabrié, M., & Durmus, A. O. (ICLR 2025).

A unifying mathematical framework

The heart of Durmus’s proposal is to view these generative processes through the lens of stochastic optimal control (SOC), a powerful mathematical tool traditionally used in fields like robotics and finance. By applying SOC to generative AI, he hopes to build a theory that not only explains how these models work but also shows how to make them better.

“Imagine guiding a cloud of particles from pure randomness toward structured data,” Durmus says. “SOC helps us define the best way to do that, with mathematical guarantees on stability, convergence, and performance.”

In this framing, the learning process becomes more than a guessing game. Score functions and velocity fields, the mathematical engines behind diffusion and flow models, are recast as optimal control policies. The value function, a key concept in SOC, becomes the compass that directs the generative journey.
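For readers who want the shape of the mathematics, a generic textbook form of a stochastic optimal control problem is sketched below. The notation (reference drift $b$, scalar noise level $\sigma$, control $u$, terminal cost $g$, horizon $T$) and the quadratic control cost are assumptions made for illustration; the article does not specify the exact formulation used in the project.

```latex
% Controlled dynamics: a reference drift b plus a steering term sigma * u
\mathrm{d}X_t = \big[\, b(X_t, t) + \sigma\, u(X_t, t) \,\big]\,\mathrm{d}t + \sigma\,\mathrm{d}W_t

% Value function: the best achievable expected cost from state x at time t
V(x, t) = \inf_{u}\; \mathbb{E}\!\left[ \int_t^T \tfrac{1}{2}\,\lVert u(X_s, s) \rVert^2 \,\mathrm{d}s + g(X_T) \;\middle|\; X_t = x \right]

% Hamilton-Jacobi-Bellman equation satisfied by V, with terminal condition V(x, T) = g(x)
\partial_t V + b \cdot \nabla V + \tfrac{\sigma^2}{2}\,\Delta V - \tfrac{\sigma^2}{2}\,\lVert \nabla V \rVert^2 = 0

% Optimal policy: the control is read off from the gradient of the value function
u^\star(x, t) = -\sigma\, \nabla V(x, t)
```

The link to diffusion models comes from comparing drifts. If data is noised by $\mathrm{d}X_t = f(X_t, t)\,\mathrm{d}t + \sigma\,\mathrm{d}W_t$ with marginal densities $p_t$, the time-reversed process has drift $-f + \sigma^2 \nabla \log p_{T-t}$. That matches the controlled form above with $b = -f$ and $u = \sigma \nabla \log p_{T-t}$, which is the sense in which a score function can be read as a control policy. How the cost functional is chosen, and how exactly $V$ relates to $\log p$, depends on the specific formulation.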

Theory for practice

This isn’t just a theoretical exercise. The insights from Durmus’s work could radically improve how generative AI is built and used. From choosing the right noise schedules to ensuring reliability in data-scarce environments, the project offers a roadmap for AI systems that are not just powerful, but trustworthy.

“We want to move away from endless hyperparameter tuning toward principled design,” he says. “That means models that train faster, generalize better, and provide reliable uncertainty estimates.”

Where GenAI goes next

While most popular applications of generative AI focus on visuals, Durmus is thinking bigger. His framework extends naturally to structured and complex data, like molecular graphs, geometric manifolds, or time-evolving systems. By embedding the control process into these domains, his approach opens the door to generative models that respect physical laws, symmetries, and domain-specific constraints.

This is especially relevant in scientific fields where precision and interpretability matter deeply, from drug discovery to materials science.

Safer, smarter AI

As generative models begin to shape real-world decisions, safety and reliability are non-negotiable. Durmus’s work addresses this head-on, introducing ways to quantify uncertainty, detect overfitting, and understand when a model is pushing beyond its limits.

“We can treat memorization and mode collapse as failures in control,” he explains. “That gives us tools to fix them, not just observe them.”

Turning theory into tools

Durmus’s project is ambitious, and its potential is equally vast. By bringing rigorous mathematics into the heart of generative AI, it offers a path toward models that are not only more efficient, but also more ethical, interpretable, and dependable.

“We’re not just building better models,” he concludes. “We’re trying to build a science of generative AI, one that can be trusted in the lab, the clinic, or the factory floor.”

Stay tuned for more profiles of our 2025 chairs, highlighting their work in AI & Society, AI & Business, and AI & Science.